|

GemaCoreLib

The GeMA Core library

|

|

GemaCoreLib

The GeMA Core library

|

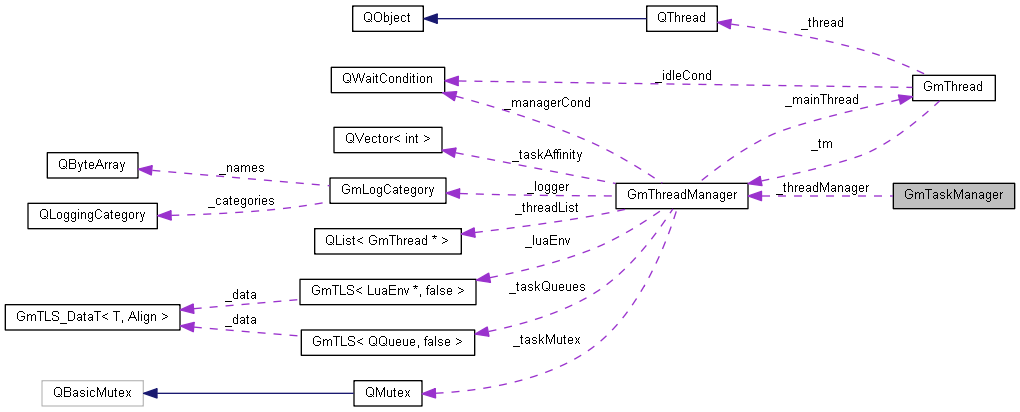

Task manager used for handling parallel executions. More...

#include <gmTaskManager.h>

Public Types | |

| enum | NodePartitionStrategy { NPS_DEFAULT = -1, NPS_SEQUENTIAL_BLOCK = 0, NPS_NUM_STRATEGIES } |

| Partition strategies for a parallel node loop. More... | |

| enum | CellPartitionStrategy { CPS_DEFAULT = -1, CPS_SEQUENTIAL_BLOCK = 0, CPS_NUM_STRATEGIES } |

| Partition strategies for a parallel cell loop. More... | |

Public Member Functions | |

| GmTaskManager (GmSimulationData *simData, GmThreadManager *tm) | |

| Basic constructor. | |

| GmThreadManager * | threadManager () const |

| Returns the associated thread manager. | |

| int | numTasks () const |

| Returns the number of tasks added to the queue by addNodetasks or addCellTasks. | |

| template<class T > | |

| GmThreadTaskResult | runParallelNodeLoop (const GmMesh *m, GmAffectedNodes affNodes, T &taskObj, int ntasks=0, NodePartitionStrategy strategy=NPS_DEFAULT, int nworkers=-1, const QVector< int > &taskAffinity=QVector< int >()) |

| Parallel executes the given task over mesh nodes. More... | |

| template<class T > | |

| void | execParallelNodeLoop (const GmMesh *m, GmAffectedNodes affNodes, T &taskObj, int ntasks=0, NodePartitionStrategy strategy=NPS_DEFAULT, int nworkers=-1, const QVector< int > &taskAffinity=QVector< int >()) |

| Similar to runParallelNodeLoop() but raising a lua error if the execution was either canceled or aborted. | |

| template<class T > | |

| GmThreadTaskResult | runParallelCellLoop (GmCellMesh *m, bool activeOnly, T &taskObj, int ntasks=0, CellPartitionStrategy strategy=CPS_DEFAULT, int nworkers=-1, const QVector< int > &taskAffinity=QVector< int >()) |

| Parallel executes the given task over mesh cells. More... | |

| template<class T > | |

| GmThreadTaskResult | runParallelCellLoop (GmCellGroupSet *gs, bool activeOnly, T &taskObj, int ntasks=0, CellPartitionStrategy strategy=CPS_DEFAULT, int nworkers=-1, const QVector< int > &taskAffinity=QVector< int >()) |

| Similar to runParallelCellLoop(GmCellMesh*, ...) but receiving a CellGroupSet as the cell source instead of the mesh. | |

| template<class T > | |

| void | execParallelCellLoop (GmCellMesh *m, bool activeOnly, T &taskObj, int ntasks=0, CellPartitionStrategy strategy=CPS_DEFAULT, int nworkers=-1, const QVector< int > &taskAffinity=QVector< int >()) |

| Similar to runParallelCellLoop() but raising a lua error if the execution was either canceled or aborted. | |

| template<class T > | |

| bool | addNodeTasks (const GmMesh *m, GmAffectedNodes affNodes, T &taskObj, int ntasks, NodePartitionStrategy strategy, int nworkers, const QVector< int > &taskAffinity, bool *ok) |

| Worker function for adding node tasks to the thread manager. See runParallelNodeLoop() for parameter descriptions. Returns false if no tasks where added to the queue. That can be due to the mesh having no nodes of the specified affNodes or due to an error. The ok parameter is filled with false on errors, true if not. More... | |

| template<class M , class T > | |

| bool | addCellTasks (M *m, bool activeOnly, T &taskObj, int ntasks, CellPartitionStrategy strategy, int nworkers, const QVector< int > &taskAffinity, bool *ok) |

| Worker function for adding cell tasks to the thread manager. See runParallelCellLoop() for parameter descriptions Returns false if no tasks where added to the queue. That can be due to the mesh having no (active) cells or due to an error. The ok parameter is filled with false on errors, true if not. More... | |

Static Public Member Functions | |

| static NodePartitionStrategy | str2NodePartitionStrategy (QString str) |

| Converts a string into a NodePartitionStrategy. Unknown strings are converted to 'default'. | |

| static CellPartitionStrategy | str2CellPartitionStrategy (QString str) |

| Converts a string into a CellPartitionStrategy. Unknown strings are converted to 'default'. | |

Private Member Functions | |

| void | adjustExecParameters (int *ntasks, int *nworkers) |

| Adjusts task execution parameters, converting possible default options to real values. | |

| QVector< GmNodeTaskDataSet * > | buildNodePartition (const GmMesh *m, int n, GmAffectedNodes affNodes, NodePartitionStrategy strategy) const |

| Creates a list with the 'n' parallel node tasks to be executed, given the destination mesh, the desired affected nodes and the partition strategy. | |

| template<class M > | |

| QVector< GmCellTaskDataSet * > | buildCellPartition (M *m, int n, bool activeOnly, CellPartitionStrategy strategy) const |

| Creates a list with the 'n' parallel cell tasks to be executed, given the destination mesh, if inactive cells should be considered or not and the partition strategy. | |

Private Attributes | |

| GmThreadManager * | _threadManager |

| The thread manager used to run tasks in multiple threads. | |

| int | _defNumTasks |

| The default number of tasks for a parallel loop. Negative numbers are multiples of the number of workers. | |

| NodePartitionStrategy | _defNodeStrategy |

| The default strategy for node partitioning. | |

| CellPartitionStrategy | _defCellStrategy |

| The default strategy for cell partitioning. | |

Task manager used for handling parallel executions.

| bool GmTaskManager::addCellTasks | ( | M * | m, |

| bool | activeOnly, | ||

| T & | taskObj, | ||

| int | ntasks, | ||

| CellPartitionStrategy | strategy, | ||

| int | nworkers, | ||

| const QVector< int > & | taskAffinity, | ||

| bool * | ok | ||

| ) |

Worker function for adding cell tasks to the thread manager. See runParallelCellLoop() for parameter descriptions Returns false if no tasks where added to the queue. That can be due to the mesh having no (active) cells or due to an error. The ok parameter is filled with false on errors, true if not.

< The user task callable object to be run

< The cell partition assigned to this task

| bool GmTaskManager::addNodeTasks | ( | const GmMesh * | m, |

| GmAffectedNodes | affNodes, | ||

| T & | taskObj, | ||

| int | ntasks, | ||

| NodePartitionStrategy | strategy, | ||

| int | nworkers, | ||

| const QVector< int > & | taskAffinity, | ||

| bool * | ok | ||

| ) |

Worker function for adding node tasks to the thread manager. See runParallelNodeLoop() for parameter descriptions. Returns false if no tasks where added to the queue. That can be due to the mesh having no nodes of the specified affNodes or due to an error. The ok parameter is filled with false on errors, true if not.

< The user task callable object to be run

< The node partition assigned to this task

| GmThreadTaskResult GmTaskManager::runParallelCellLoop | ( | GmCellMesh * | m, |

| bool | activeOnly, | ||

| T & | taskObj, | ||

| int | ntasks = 0, |

||

| CellPartitionStrategy | strategy = CPS_DEFAULT, |

||

| int | nworkers = -1, |

||

| const QVector< int > & | taskAffinity = QVector<int>() |

||

| ) |

Parallel executes the given task over mesh cells.

| mesh | The mesh |

| activeOnly | Should the tasks traverse active cells only? |

| taskObj | The task to be executed over mesh cell groups. This is a function object (a function pointer or an object with operator()) that will be called in each worker thread receiving as parameters a pointer to its own GmCellTaskDataSet object and a pointer to the const GmThread object in which it is being executed. It should return a GmThreadTaskResult value. |

| ntasks | The number of cell groups in which the mesh will be partitioned. If less than the number of workers, the used number of workers will be decreased to match. If greater, tasks will be queued and sent to workers as they become available. If 0, the default number of tasks will be used. If > 0, that number will be used. If < 0, the number of tasks will be equal to the effective number of workers multiplied by the absoulte value of the given number of tasks (-1 equal the number of workers, -2 twice the number of workers and so on). |

| strategy | The startegy that will be used to create cell groups. |

| nworkers | The number of desired worker threads. If the desired value is greater than the maximum value supported by the thread manager, the maximum value will be used. If -1, the default number of workers configured in the thread manager will be used. A value of 0 means that the tasks will be executed by the main thread. |

| taskAffinity | An optional vector defining the requested thread id for executing each task. Should be either an empty vector (no thread locking will be used) or a vector with the same number of entries as the number of tasks that will be effectively used in the call. See also the comments on GmThreadManager::addTask() |

GM_THREAD_CANCELED if the executions has been canceled or GM_THREAD_ABORTED on execution errors.

| GmThreadTaskResult GmTaskManager::runParallelNodeLoop | ( | const GmMesh * | m, |

| GmAffectedNodes | affNodes, | ||

| T & | taskObj, | ||

| int | ntasks = 0, |

||

| NodePartitionStrategy | strategy = NPS_DEFAULT, |

||

| int | nworkers = -1, |

||

| const QVector< int > & | taskAffinity = QVector<int>() |

||

| ) |

Parallel executes the given task over mesh nodes.

| mesh | The mesh |

| affNodes | The type of nodes that should be traversed (geometry, ghost or both) |

| taskObj | The task to be executed over mesh node groups. This is a function object (a function pointer or an object with operator()) that will be called in each worker thread receiving as parameters a pointer to its own GmNodeTaskDataSet object and a pointer to the const GmThread object |

in which it is being executed. It should return a GmThreadTaskResult value.

| ntasks | The number of node groups in which the mesh will be partitioned. If less than the number of workers, the used number of workers will be decreased to match. If greater, tasks will be queued and sent to workers as they become available. If 0, the default number of tasks will be used. If > 0, that number will be used. If < 0, the number of tasks will be equal to the effective number of workers multiplied by the absoulte value of the given number of tasks (-1 equal the number of workers, -2 twice the number of workers and so on). |

| strategy | The startegy that will be used to create node groups. |

| nworkers | The number of desired worker threads. If the desired value is greater than the maximum value supported by the thread manager, the maximum value will be used. If -1, the default number of workers configured in the thread manager will be used. A value of 0 means that the tasks will be executed by the main thread. |

| taskAffinity | An optional vector defining the requested thread id for executing each task. Should be either an empty vector (no thread locking will be used) or a vector with the same number of entries as the number of tasks that will be effectively used in the call. See also the comments on GmThreadManager::addTask() |

GM_THREAD_CANCELED if the executions has been canceled or GM_THREAD_ABORTED on execution errors.

1.8.15

1.8.15